Google’s MedGemma: Open-Source Medical AI for Imaging, EHR, and Beyond

🧬 What is MedGemma?

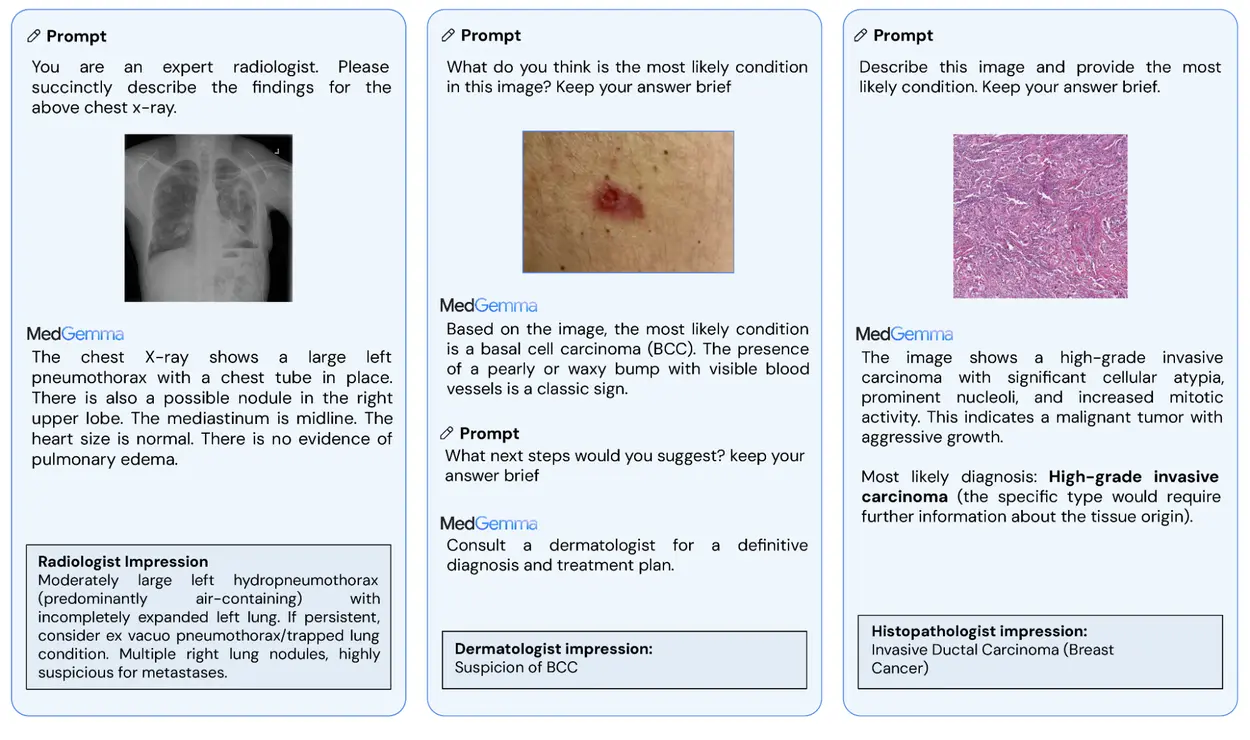

MedGemma, Google Research’s latest addition to Health AI Developer Foundations (HAI‑DEF), is a family of open-source, multimodal AI models tailored for the medical domain. Built on the Gemma 3 architecture, it extends Gemma's capabilities to medical imaging, electronic health records (EHRs), and other medical text.

🆕 New Launches

- MedGemma 27B Multimodal

  - Interprets long-form patient records together with medical images (e.g., chest X‑rays, histopathology, dermatology, fundus photography).

  - Achieves 87.7% accuracy on the MedQA benchmark, competitive with much larger models at a fraction of their inference cost.

- MedSigLIP

  - A compact 400M‑parameter encoder that embeds medical images and text into a shared representation.

  - Enables classification, zero‑shot prediction, and semantic retrieval across modalities.
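
MedSigLIP's shared embedding space is what makes zero-shot prediction and retrieval possible: an image and a text label land in the same vector space, so their similarity can be compared directly. The sketch below assumes the embeddings have already been computed (real ones would come from MedSigLIP's image and text encoders, which this code does not call) and uses hypothetical 3-dimensional toy vectors. It scores each label with a sigmoid over a scaled cosine similarity, the scoring style used by SigLIP-family models; the `scale` and `bias` values are placeholders, not MedSigLIP's learned parameters.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_scores(
    image_emb: Vector,
    label_embs: Dict[str, Vector],
    scale: float = 10.0,  # placeholder, NOT MedSigLIP's learned scale
    bias: float = -5.0,   # placeholder, NOT MedSigLIP's learned bias
) -> Dict[str, float]:
    """Score each text label independently: sigmoid(scale * cosine + bias)."""
    scores = {}
    for label, emb in label_embs.items():
        logit = scale * cosine(image_emb, emb) + bias
        scores[label] = 1.0 / (1.0 + math.exp(-logit))
    return scores


def retrieve(query_emb: Vector, corpus: Dict[str, Vector], k: int = 2) -> List[Tuple[str, float]]:
    """Semantic retrieval: the k corpus entries most similar to the query."""
    ranked = sorted(
        ((key, cosine(query_emb, emb)) for key, emb in corpus.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:k]


# Hypothetical toy embeddings standing in for real MedSigLIP outputs.
image = [0.9, 0.1, 0.0]
label_texts = {
    "chest X-ray with pleural effusion": [1.0, 0.0, 0.0],
    "normal chest X-ray": [0.0, 1.0, 0.0],
    "dermatology photograph": [0.0, 0.0, 1.0],
}

scores = zero_shot_scores(image, label_texts)
best = max(scores, key=scores.get)
print(best)  # → chest X-ray with pleural effusion
print(retrieve(image, label_texts, k=2))
```

Because each label gets its own sigmoid score rather than a share of a softmax, several findings can score high at once, which suits images that show more than one condition.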

📊 MedGemma Performance

| Model | Parameters | Input Types | MedQA Accuracy |
|---|---|---|---|
| 4B Multimodal | 4B | Image + Text | 64.4% |
| 27B Text-only | 27B | Text | 87.7% |
| 27B Multimodal | 27B | Image + Text | 87.7% |

- Chest X‑ray Reporting: a US board-certified radiologist judged 81% of MedGemma 4B’s generated chest X‑ray reports accurate enough to result in patient management similar to that based on the original radiologist reports.

🔧 Why Open Matters

- Privacy & Deployment Freedom: Run on local hardware to keep patient data on-premises, or deploy in the cloud.

- Highly Customizable: Fine-tune for specific clinical needs, e.g., Traditional Chinese-language medical text or urgent X-ray triage.

- Reproducible Results: Released as fixed model checkpoints that stay the same over time, unlike hosted models whose behavior can shift with provider updates, supporting stable, community-driven development.

- Accessible Ecosystem: Available on Hugging Face, Vertex AI, and GitHub for developers everywhere.

👩 Real‑World Uses

- DeepHealth (US): Exploring MedSigLIP for chest X-ray triage and nodule detection.

- Chang Gung Memorial Hospital (Taiwan): Found MedGemma works well with Traditional Chinese-language medical literature.

- Tap Health (India): Used for summarizing progress notes and suggesting guideline-aligned clinical recommendations.

📈 Why It Matters

- World‑class healthcare, on your phone. MedGemma 4B is lightweight enough to run on consumer devices, opening new possibilities for low-resource settings.

- Lower barriers to innovation. Small clinics and underserved regions gain access to cutting-edge healthcare AI without proprietary barriers.

- Trustworthy AI innovation. Transparent, open releases promote safer and more collaborative medical AI progress.
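
Whether a model fits on a given device starts with a simple estimate: weight memory ≈ parameter count × bytes per parameter. A minimal sketch, using the nominal 4B and 27B counts (released checkpoints differ slightly) and counting weights only; activations, the KV cache, and runtime overhead add more. The `int4` rows assume a 4-bit quantized build, which the original post does not describe.

```python
# Weight-only memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and runtime overhead, so real usage is higher.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory in decimal gigabytes (1e9 bytes) to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal parameter counts; released checkpoints differ slightly.
for name, params in [("MedGemma 4B", 4e9), ("MedGemma 27B", 27e9)]:
    for dtype in ("bf16", "int4"):  # int4 assumes a hypothetical quantized build
        print(f"{name} @ {dtype}: ~{weight_memory_gb(params, dtype):.1f} GB")
```

At bf16 this gives roughly 8 GB of weights for the 4B model versus roughly 54 GB for 27B, which is why the 4B variant is the realistic candidate for consumer hardware.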

🧭 How to Get Started

- Explore on:

  - GitHub

  - Hugging Face

  - Google Vertex AI

- Choose your variant:

  - 4B for lightweight mobile apps

  - 27B for clinical reasoning and deep diagnostic tasks

- Fine-tune or prompt-engineer for your use case.
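
For the Hugging Face route, here is a minimal quickstart sketch with the `transformers` `pipeline` API, loosely modeled on the usage pattern in the MedGemma model cards. Assumptions: the model IDs below match the hub at the time of writing (check before use), you have accepted the model's terms on Hugging Face, and a CUDA GPU is available. `build_messages` and `ask` are hypothetical helper names; the heavy imports sit inside `ask` so the message-building part runs without `torch` or `transformers` installed. The 27B text-only checkpoint is left out because it is served through the text-generation task rather than image-text-to-text.

```python
from typing import Any, Dict, List, Optional

# Multimodal MedGemma checkpoints on Hugging Face (IDs as of writing; verify on the hub).
MODEL_IDS = {
    "4b-multimodal": "google/medgemma-4b-it",
    "27b-multimodal": "google/medgemma-27b-it",
}


def build_messages(
    question: str,
    image: Optional[Any] = None,
    system: str = "You are an expert radiologist.",
) -> List[Dict[str, Any]]:
    """Build a chat in the role/content-list format used by transformers chat pipelines."""
    user_content: List[Dict[str, Any]] = [{"type": "text", "text": question}]
    if image is not None:
        # e.g. a PIL.Image of a chest X-ray
        user_content.append({"type": "image", "image": image})
    return [
        {"role": "system", "content": [{"type": "text", "text": system}]},
        {"role": "user", "content": user_content},
    ]


def ask(
    question: str,
    image: Optional[Any] = None,
    variant: str = "4b-multimodal",
    max_new_tokens: int = 200,
) -> str:
    """Send one prompt to a MedGemma checkpoint (needs a CUDA GPU and gated-model access)."""
    # Heavy imports live here so build_messages() works without torch/transformers.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "image-text-to-text",
        model=MODEL_IDS[variant],
        torch_dtype=torch.bfloat16,
        device="cuda",
    )
    output = pipe(text=build_messages(question, image), max_new_tokens=max_new_tokens)
    # The pipeline returns the full chat; the last turn holds the model's reply.
    return output[0]["generated_text"][-1]["content"]
```

In a real app you would build the pipeline once and reuse it; it is created inside `ask` only to keep the sketch short. A call might look like `ask("Describe this X-ray", image=Image.open("cxr.png"))` with a PIL image (the file name is hypothetical).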

🛑 Caveats & What’s Next

- Not a medical device: MedGemma outputs need local validation before any clinical use.

- Areas of active development: multi-image input, non-English support, multi-turn reasoning.
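
Local validation usually starts by comparing model output with a local reference standard. A minimal sketch, assuming a binary task (for example, whether a finding is flagged on a chest X-ray) and hypothetical labels; which metrics and acceptance thresholds matter is a decision for the local clinical team, not something this code settles.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Sensitivity, specificity, and PPV from paired labels (1 = finding present)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    # NaN signals an undefined ratio (no cases in that denominator).
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else float("nan"),
        "specificity": tn / (tn + fp) if tn + fp else float("nan"),
        "ppv": tp / (tp + fp) if tp + fp else float("nan"),
    }


# Hypothetical local data: reference read (y_true) vs. model flag (y_pred).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
print(binary_metrics(y_true, y_pred))
```

For these toy labels the model catches 3 of 4 positive cases (sensitivity 0.75) and correctly clears 5 of 6 negatives (specificity about 0.83).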

🧠 Bottom Line

Google’s MedGemma models mark a major milestone in healthcare AI: multimodal, accurate, open, and accessible. From chest X-rays to dermatology and clinical reasoning, MedGemma supports AI-assisted care, and its 4B variant is small enough to run on consumer devices.

Original post: “MedGemma: Our most capable open models for health AI development”