AI Robot Masters Surgical Tasks: The Future of Robotic-Assisted Surgery Unveiled at Johns Hopkins University

In an era where artificial intelligence is redefining possibilities in medicine, researchers at Johns Hopkins University have pushed the boundaries by enabling a surgical robot to master complex medical procedures. The robot learned these skills solely by watching videos of human surgeons at work, a method that could reshape surgical training, precision, and access to skilled medical care worldwide.

From Observation to Operation: Training the Robot

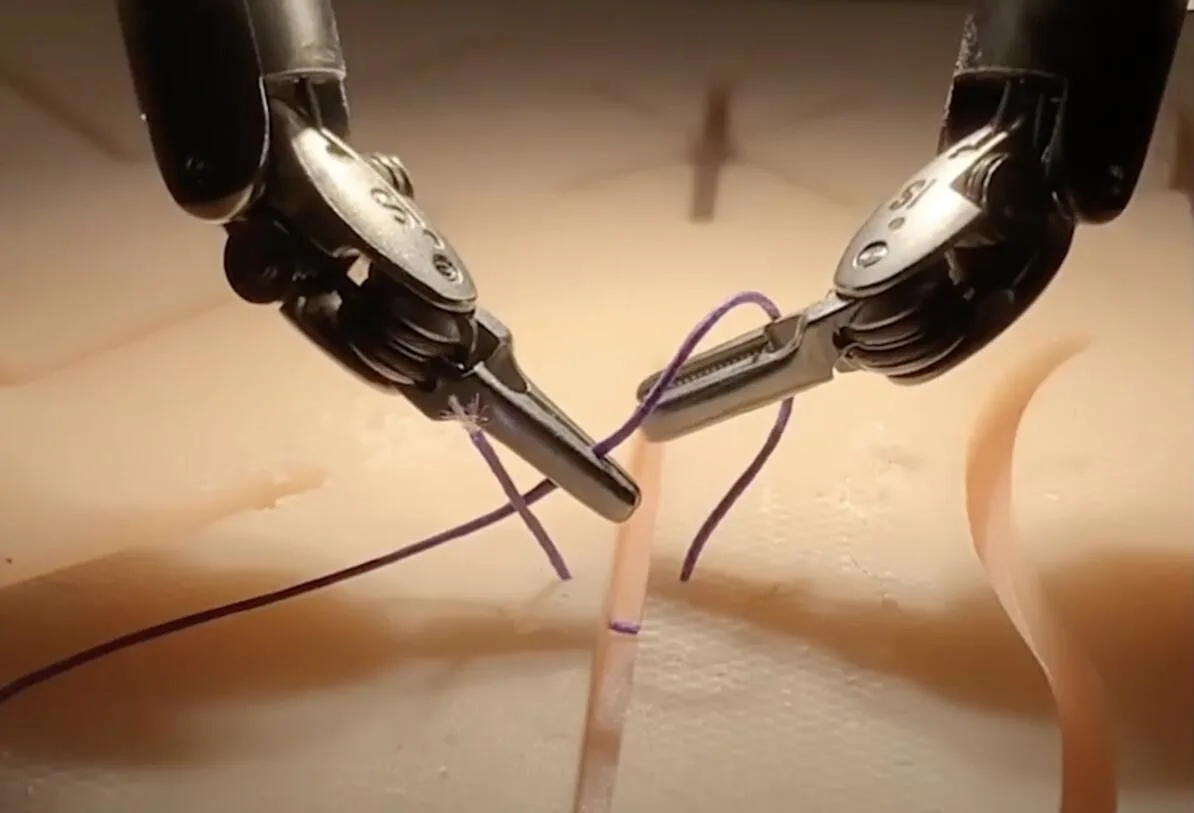

The breakthrough was achieved using the da Vinci Surgical System, a widely used robotic surgical platform that has assisted countless surgeons in minimally invasive procedures. This time, instead of being operated by a human, the da Vinci robot took on a learning role. By analyzing hundreds of videos recorded by wrist cameras on da Vinci robot arms, the system observed and internalized the mechanics of tasks like needle manipulation, tissue handling, and suturing.

Unlike traditional approaches, which rely on hand-programming each step of a procedure, this robot learned through a process called imitation learning. In imitation learning, a model is trained on recorded demonstrations to predict the action an expert would take in a given situation. With a high volume of surgical videos to learn from, the robot’s AI model gradually learned to reproduce the fine motor skills seen on screen.
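To make the idea of imitation learning concrete, here is a minimal sketch of its simplest form, often called behavior cloning: record what an expert does in each situation, then fit a policy that predicts the same action. Everything in it is a toy assumption for illustration (a one-dimensional "tool tip", a made-up `expert_action` rule, a straight-line policy); it is not the Johns Hopkins model, which learns from video with a far larger neural network.

```python
import random


def expert_action(offset):
    """Hypothetical expert: move the tool tip 40% of the way toward the target."""
    return 0.4 * offset


def collect_demonstrations(n, seed=0):
    """Record (observation, action) pairs from the expert, with slight hand noise."""
    rng = random.Random(seed)
    demos = []
    for _ in range(n):
        offset = rng.uniform(-1.0, 1.0)  # observed distance from tip to target
        action = expert_action(offset) + rng.gauss(0.0, 0.01)
        demos.append((offset, action))
    return demos


def fit_linear_policy(demos):
    """Ordinary least squares fit of action = w * offset + b."""
    n = len(demos)
    mean_x = sum(x for x, _ in demos) / n
    mean_y = sum(y for _, y in demos) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in demos)
    var = sum((x - mean_x) ** 2 for x, _ in demos)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def rollout(w, b, start_offset, steps=20):
    """Run the learned policy on its own and return the remaining offset."""
    offset = start_offset
    for _ in range(steps):
        offset -= w * offset + b  # apply the predicted movement
    return offset


demos = collect_demonstrations(500)
w, b = fit_linear_policy(demos)
final_offset = rollout(w, b, start_offset=0.8)
print(f"learned gain={w:.3f}, bias={b:.3f}, final offset={final_offset:.5f}")
```

Nothing in the policy was programmed to "approach the target". That behavior is recovered entirely from the recorded demonstrations, which is the core of the approach described above.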

Blending AI and Surgical Kinematics: A "Surgical Language"

The AI model used in this project is built on the same kind of machine learning architecture that underpins ChatGPT. Where ChatGPT predicts patterns in words and text, this model works with kinematics, the mathematical description of robot motion, and predicts the tiny, precise movements of human surgeons. By integrating these visual and kinematic observations, the system learned to “speak surgery” in a sense, combining the science of motion with the art of delicate maneuvers.
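The language analogy can be illustrated with a toy example: turn a motion trace into discrete "tokens" and predict the next one from what came before. The sketch below is only an illustration of that analogy. The bin width, the sample traces, and the bigram count model (a very crude stand-in for a transformer) are all assumptions made here, not details of the Johns Hopkins system.

```python
from collections import Counter, defaultdict

BIN_WIDTH = 0.5  # millimetres per token; an arbitrary illustrative choice


def tokenize(positions):
    """Turn a 1-D position trace into tokens of binned step-to-step displacement."""
    return [round((b - a) / BIN_WIDTH) for a, b in zip(positions, positions[1:])]


def train_bigram(token_sequences):
    """Count how often each motion token is followed by each other token."""
    counts = defaultdict(Counter)
    for tokens in token_sequences:
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev):
    """Most frequent successor of `prev`, or None if `prev` was never seen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


# Hypothetical demonstration traces: steady approach, slow down, then stop.
demo_traces = [
    [0.0, 1.0, 2.0, 3.0, 3.5, 3.5],
    [1.0, 2.0, 3.0, 4.0, 4.5, 4.5],
]

model = train_bigram(tokenize(trace) for trace in demo_traces)
print(tokenize(demo_traces[0]))  # [2, 2, 2, 1, 0]
print(predict_next(model, 1))    # 0: after slowing down, the tool stops
```

The tokens describe how far the tool moves at each step rather than where it is, so the two traces, which start at different positions, produce identical token sequences.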

The robot even demonstrated an impressive level of adaptability. For example, when a needle slipped out of its grasp, it automatically picked the needle back up, a response that no one explicitly programmed. This capacity for error correction and recovery is a key trait that could make surgical robots far more autonomous and resilient.

A Milestone for Surgical Robotics

This achievement at Johns Hopkins highlights the potential of AI-driven robotics to overcome traditional limitations in surgery. Today, surgical robots are operated directly by surgeons, which demands extensive training and specialized skill. This model points toward a future where robots can autonomously assist with, or even perform, routine surgical tasks with minimal oversight, freeing human surgeons for more intricate, high-stakes procedures.

Why Video-Based Training Matters

The approach of using video-based training could transform the field in the same way large language models revolutionized AI. Imagine a system where, instead of coding each action and response, robots gain proficiency by “watching” and practicing procedures from an endless repository of surgeries worldwide. Such adaptability could allow for cross-specialization, enabling one robot to assist with different types of surgeries simply by watching the relevant training videos.

Implications for the Future of Healthcare

The broader implications of this development are significant. As robotic systems learn to perform an increasing range of medical procedures, healthcare access may improve, particularly in regions where skilled surgeons are scarce. Hospitals could eventually deploy robotic systems that independently perform procedures such as biopsies, organ repairs, and other routine operations, potentially reducing wait times and enhancing precision.

Moreover, this innovation paves the way for cost-effective training solutions. Medical students and surgeons could benefit from simulation-based learning alongside video-trained robots, building a collaborative ecosystem where humans and machines push the boundaries of surgical capabilities.

Looking Ahead

As the Johns Hopkins team refines its AI model, robotic-assisted surgery may be entering a new era. With further research, these systems could incorporate other sensory data, such as haptic feedback, to improve their accuracy and efficacy in surgery. This breakthrough also underscores the potential for AI and robotics to reshape every stage of patient care, from diagnostics to recovery.

The Johns Hopkins experiment marks a significant step for medical robotics, pointing to a future where technology and human ingenuity work hand in hand to provide faster, safer, and more accessible healthcare for all.

Reference: Johns Hopkins University - Hub