RadAI Slice Newsletter: Weekly Updates in Radiology AI

Good morning. An AI-enhanced CT model stratified HCC risk in 2,411 cirrhosis patients with an AUC of up to 0.869. AI-driven imaging risk tools could proactively identify patients likely to develop liver cancer, enabling earlier surveillance and tailored management. This large, multicenter study reports an externally validated benefit over standard models, supporting clinical adoption for HCC risk stratification. Integrating robust radiomics approaches into routine imaging may standardize and personalize oncologic care.

PS: If this touches your work, hit Reply with one note. I read every message.

Here's what you need to know about Radiology AI last week:

- AI-enhanced CT boosts HCC risk prediction for cirrhosis patients
- FDA requests radiologist input on real-world AI device safety
- False-positive findings differ between AI and radiologists in breast DBT
- Less than 30% of FDA-cleared radiology AI devices report preapproval safety data

Plus: 10 newly released datasets, 5 FDA-approved devices & 4 new papers.

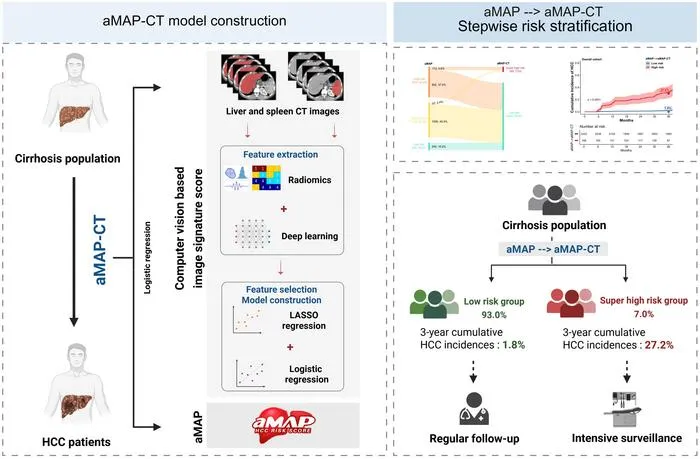

🧬 AI-enhanced CT boosts HCC risk prediction for cirrhosis patients

Image from: EurekAlert

RadAI Slice: A multicenter AI CT model stratifies hepatocellular carcinoma risk better than clinical criteria.

The details:

- 2,411 cirrhosis patients from multiple centers (2018–2023)
- All patients had 3-phase CT at baseline
- AI model AUC: 0.809–0.869, better than clinical models
- High-risk group: 26.3% HCC in 3 years; low-risk: 1.7%
- Identified 7% of patients with very high risk of HCC

Key takeaway: Robust CT-based AI models can personalize HCC surveillance, identifying high-risk and low-risk cirrhosis patients more accurately than current clinical models.

📋 FDA requests radiologist input on real-world AI device safety

Image from: AI in Healthcare

RadAI Slice: FDA opens public comment for monitoring AI in radiology and medical imaging devices.

The details:

- Comment window: Sept 30–Dec 1 for real-world device monitoring
- Focus on detection/mitigation of data and model drift
- Most FDA evaluations rely on static, retrospective testing
- User feedback sought to boost clinical safety of AI tools

Key takeaway: Broader post-market surveillance and radiologist feedback are key to maintaining AI imaging tool safety and effectiveness as adoption increases.

🧩 False-positive findings differ between AI and radiologists in breast DBT

RadAI Slice: Head-to-head study shows different false-positive profiles for AI vs radiologists in breast DBT.

The details:

- Sample: 2,977 women, 3,183 exams (2013–2017)
- False-positive rates nearly identical (9.7% AI vs 9.5% radiologist)
- AI-only: more benign calcifications; radiologist-only: more masses
- Concordant findings high-risk in 44% of biopsies

Key takeaway: Knowing which findings trigger false positives for AI vs radiologists can guide tool improvement, reduce unnecessary recalls, and optimize breast cancer screening.

📝 Less than 30% of FDA-cleared radiology AI devices report preapproval safety data

Image from: Radiology Business

RadAI Slice: Systematic review exposes a gap in safety reporting for AI radiology devices cleared by the FDA.

The details:

- Reviewed 691 AI/ML devices (1995–2023); 531 in radiology
- <30% provided safety, efficacy, or adverse event data
- No predefined safety or efficacy standards for AI/ML devices in US
- Calls for stricter approval/testing for imaging AI devices

Key takeaway: Regulatory transparency and robust safety data reporting must improve for FDA-cleared AI radiology devices to maintain patient trust and safe implementation.

CardioBench (2025-10-01)

- Modality: US | Focus: Cardiac, pediatric | Task: Regression, classification
- Size: Data from 8 datasets: >40,000 echo videos/images, >30,000 patients
- Annotations: Regression (ejection fraction, chamber dimensions, wall thickness); classification (disease, pathology, view); expert and automated labels
- Institutions: MBZUAI, Stanford AIMI et al.
- Availability:
- Highlight: First standardized benchmark aggregating 8 open echo datasets for multi-task, multi-view echocardiography AI

MedQ-Bench (2025-10-02)

- Modality: CT, MRI, X-ray, Endoscopy, Fundus, Histopathology | Focus: multi-organ, general body regions | Task: quality assessment, visual reasoning
- Size: 3,308 images, 5 modalities, multi-source, balanced by task/degradation
- Annotations: Multi-type: perception (MCQA with Yes/No, What, How Q&A), detailed expert-written quality reasoning, pairwise comparison labels
- Institutions: Fudan University, Shanghai Artificial Intelligence Laboratory, et al.
- Availability:
- Highlight: First benchmark for human-like reasoning and perception in medical image quality across 5 modalities with expert-aligned annotation.

SpurBreast (2025-10-02)

- Modality: MRI | Focus: Breast | Task: Classification, Generalization Robustness
- Size: 900+ 3D scans, 900+ patients; 250 slices per scan
- Annotations: Tumor presence (slices labeled positive/negative); 100+ patient and imaging metadata features
- Institutions: Ghent University Global Campus, IDLab Ghent University et al.
- Availability:
- Highlight: Curated real breast MRI datasets with controlled spurious correlations for bias and robustness research

Hemorica (2025-09-26)

- Modality: CT | Focus: Brain | Task: Classification, Segmentation
- Size: 372 head CT scans, 372 patients, 12,067 slices
- Annotations: Five ICH subtypes with patient- and slice-level labels, bounding boxes, 2D and 3D segmentation masks
- Institutions: Rasoul Akram Hospital, K.N. Toosi University of Technology
- Availability:
- Highlight: Fine-grained public dataset: subtype, masks, boxes, consensus annotation for ICH.

ViMed-PET (2025-09-29)

- Modality: PET/CT | Focus: Whole body (oncology), Lung | Task: Report generation, VQA
- Size: 1,567,062 scans, 2,757 patients
- Annotations: Full-length Vietnamese clinical reports; expert-curated lung cancer test set with structured labels
- Institutions: Hanoi University of Science and Technology, 108 Military Central Hospital et al.
- Availability:
- Highlight: First large-scale paired PET/CT-reports dataset in Vietnamese; rich multimodal and multilingual resource

MetaChest (2025-10-01)

- Modality: X-ray | Focus: Chest, Lungs | Task: Classification, Few-shot learning
- Size: 479,215 chest X-ray images from 322,475 patients
- Annotations: Multi-label pathology annotations for 15 common chest diseases
- Institutions: Instituto de Investigaciones en Matemáticas Aplicadas y en Sistemas, UNAM et al.
- Availability:
- Highlight: Largest chest X-ray few-shot/meta-learning benchmark; unified meta-set with algorithmic episode generation

LMOD+ (2025-09-30)

- Modality: CFP, SLO, OCT, LP, SS | Focus: Retina, Lens | Task: Classification, Segmentation
- Size: 32,633 images; 10 datasets; over 11,000 patients (estimated, varies by source); 5 modalities
- Annotations: Multi-granular labels: disease diagnosis, disease stage, anatomical region bounding boxes, demographics (age, sex), free text
- Institutions: Yale University, Australian National University et al.
- Availability: Public (link to be announced)
- Highlight: Largest, most diverse multimodal benchmark for AI in ophthalmology; tasks include diagnosis, staging, anatomical structure, and demographic prediction.

BreastDCEDL AMBL (2025-05-30)

- Modality: MRI | Focus: Breast | Task: Classification, Segmentation
- Size: 133 lesions (89 benign, 44 malignant) from 88 patients
- Annotations: Manual lesion segmentations (benign and malignant), NIfTI masks
- Institutions: Ariel University, The Cancer Imaging Archive (TCIA)
- Availability:
- Highlight: First public DCE-MRI dataset with both benign and malignant lesion annotations; supports benchmarking benign-malignant classification.

TEMMED-BENCH (2025-09-29)

- Modality: X-ray | Focus: Chest | Task: Temporal reasoning, VQA
- Size: 18,144 image pairs from 9,072 patients; test set: 1,000 cases
- Annotations: Report labels describing condition change, VQA (yes/no), image-pair selection
- Institutions: University of California Los Angeles, Amazon
- Availability:
- Highlight: First benchmark for temporal medical image reasoning; real-world, multi-visit chest X-ray pairs

Neural-MedBench (2025-09-26)

- Modality: MRI, CT | Focus: brain, neurology | Task: clinical reasoning, diagnosis
- Size: 120 cases, 200 tasks, 120 patients
- Annotations: expert-curated diagnoses, reasoning justifications, lesion labels
- Institutions: Guangdong Institute of Intelligence Science and Technology, Beijing Chaoyang Hospital et al.
- Availability:
- Highlight: Multimodal, reasoning-intensive neurology cases with structured justifications; designed as a high-density stress test for clinical reasoning in AI.

🏛️ FDA Clearances

- K251483 - SwiftSight-Brain (AIRS Medical Inc., 510(k)) aids radiologists with automatic brain image analysis for diagnostic support.
- K251527 - Brain WMH (Quantib BV, 510(k)) automates white matter hyperintensity segmentation on brain MRI for lesion quantification.
- K251987 - Rapid Aortic Measurements (iSchemaView, 510(k)) uses CT to segment and measure the aorta, supporting aortic dissection diagnosis.
- K252105 - Ligence Heart (Ligence, UAB, 510(k)) enables AI-driven cardiac segmentation and quantification from CT imaging.
- K250045 - uWS-Angio Pro (United Imaging, 510(k)) processes multimodal angiographic images for image synthesis and quantitative vessel analysis.

Explore last week's 10 radiology AI FDA approvals.

📄 Fresh Papers

- doi:10.1186/s12911-025-03193-3 - Multicenter study develops and validates interpretable deep learning and nomogram for grading PNETs using EUS images with high AUC and clinical utility.
- doi:10.1038/s43587-025-00962-7 - Genome-wide study in 56,348 individuals links 59 loci to MRI-predicted brain 'age gap' and ties high blood pressure and diabetes to accelerated brain aging.
- doi:10.1097/RLI.0000000000001186 - Real-world, prospective study shows DL super-resolution cine cardiac MRI halves exam time and matches standard cine quality, even with arrhythmia.
- doi:10.1016/j.ultrasmedbio.2025.09.002 - Meta-analysis finds MRI radiomics models predict HIFU efficacy in uterine fibroids (AUC=0.84), but notes data/reporting gaps limit generalizability.

Browse 258 new radiology AI studies from last week.

That's it for today! Before you go, we'd love to know what you thought of today's newsletter so we can improve the RadAI Slice experience for you.

👋 Quick favor: drag this into your Primary tab so you don't miss next week. Or just hit Reply with one thought. See you next week.